Cerebras Systems has unveiled the Wafer Scale Engine 3 (WSE-3), which the company describes as the world’s fastest AI chip. With 4 trillion transistors, the WSE-3 is designed for the most demanding AI computations. It doubles the performance of its predecessor at the same power draw and serves as the processor in AI supercomputers such as the Cerebras CS-3. The launch continues Cerebras’ focus on AI hardware and its collaborations with key industry and academic partners.

Main Points

Introduction of WSE-3

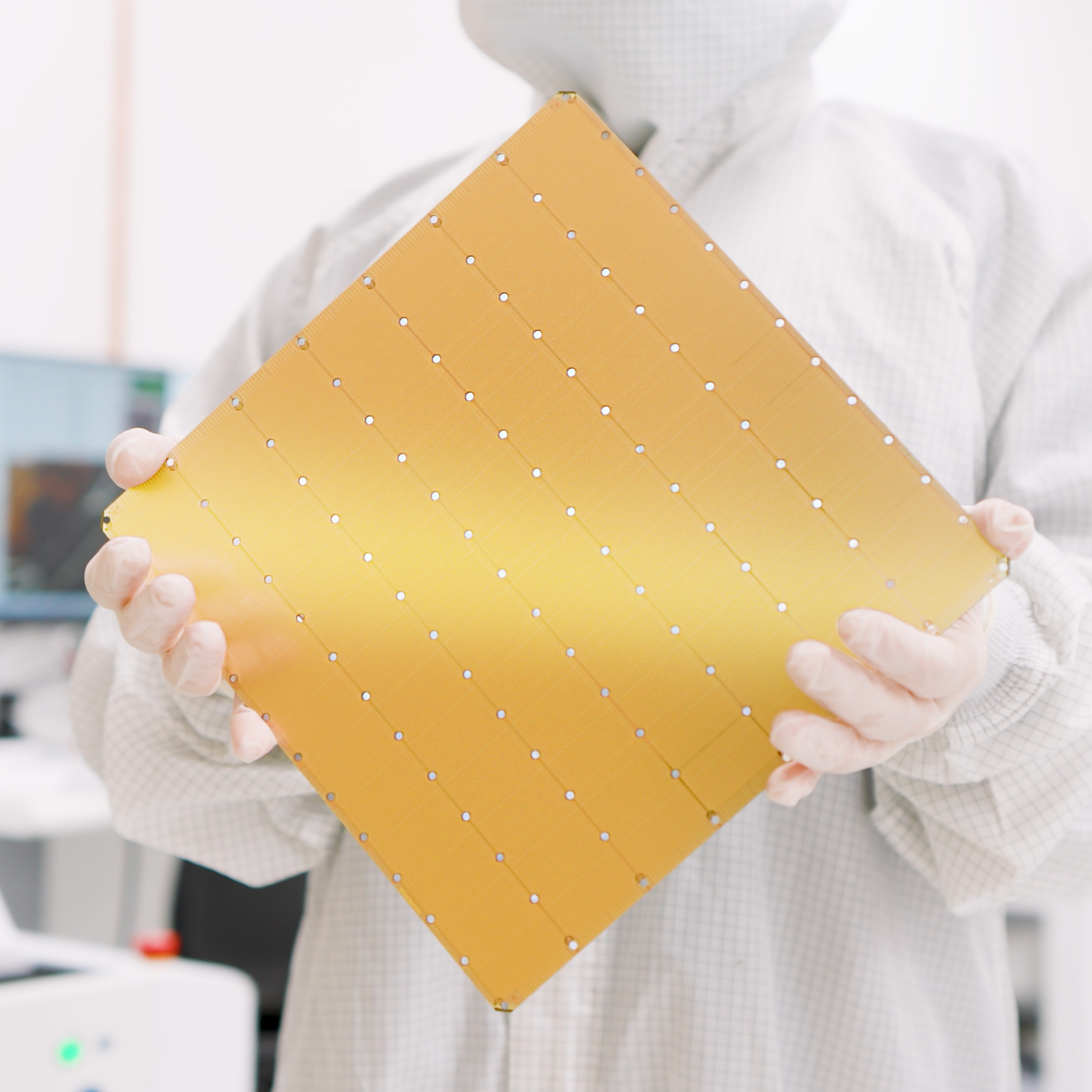

Cerebras Systems has introduced the Wafer Scale Engine 3 (WSE-3), which delivers twice the performance of its predecessor, the WSE-2, at the same power draw and cost.

WSE-3's advanced technology

Built on a 5nm process with 4 trillion transistors, the WSE-3 is designed primarily for AI workloads, in particular for training large AI models.

Benefits of CS-3 supercomputer

The CS-3 supercomputer, powered by the WSE-3, benefits directly from the chip’s gains in processing efficiency and is intended to simplify the workflow for training AI models.

Strategic partnerships enhance AI research

Strategic partnerships with organizations such as G42 and with academic institutions underscore Cerebras’ commitment to both AI research and commercial AI applications.

Insights

Cerebras Systems doubles its own world record

With the Wafer Scale Engine 3 (WSE-3), Cerebras Systems has doubled the performance of the previous record holder, its own WSE-2, while keeping the same power draw and price.

WSE-3 is purpose-built for the largest AI models

The Wafer Scale Engine 3, built on a 5nm process with 4 trillion transistors, is designed specifically to train the industry’s largest AI models.

WSE-3 powers Cerebras CS-3 AI supercomputer

Delivering 125 petaflops of peak AI performance across 900,000 AI-optimized compute cores, the WSE-3 is the processor behind the Cerebras CS-3 AI supercomputer.
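As a rough sanity check on the two headline figures, dividing peak throughput by core count gives an average per-core peak. This is our own back-of-the-envelope arithmetic, not a Cerebras specification: it assumes the 125 petaflops is spread evenly across all 900,000 cores, which real workloads will not achieve, and the helper name `per_core_peak_gflops` is hypothetical.

```python
# Back-of-the-envelope check derived from the two figures quoted above.
# Assumption: peak throughput is divided evenly across every core.
# Real workloads will not reach this, so treat it as an order of magnitude.
PEAK_FLOPS = 125e15   # 125 petaflops of peak AI performance (as quoted)
CORES = 900_000       # AI-optimized compute cores (as quoted)


def per_core_peak_gflops(peak_flops: float, cores: int) -> float:
    """Return the average peak throughput per core, in GFLOP/s (hypothetical helper)."""
    return peak_flops / cores / 1e9


if __name__ == "__main__":
    print(f"{per_core_peak_gflops(PEAK_FLOPS, CORES):.1f} GFLOP/s per core")
```

This works out to roughly 139 GFLOP/s per core, which is best read as a ceiling implied by the quoted peak rather than a measured per-core rate.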

Superior power efficiency and ease of use

While GPU power consumption is doubling with each generation, the CS-3 delivers more compute performance in less space and with less power, and it requires 97% less code than GPU-based approaches for large language models (LLMs).

Global strategic partnerships foster innovation and AI advancements

Through strategic collaborations with organizations such as G42 and with academic institutions, Cerebras Systems supports the development of new AI models and solutions on a global scale.

www.cerebras.net