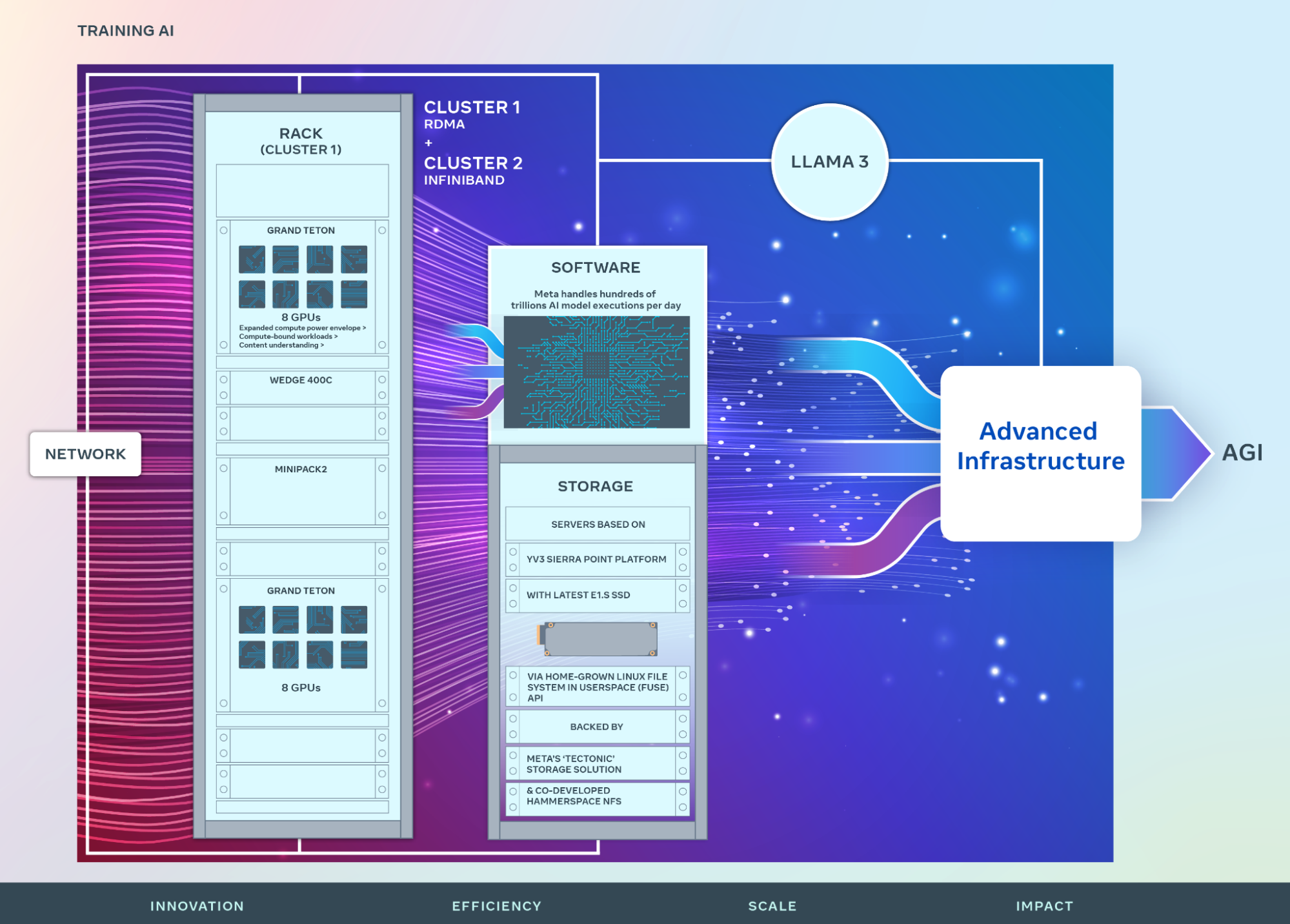

Meta announces two 24k GPU clusters as a major investment in its AI future, emphasizing its commitment to open compute and open source and an infrastructure roadmap that targets 350,000 NVIDIA H100 GPUs by the end of 2024, within a portfolio with compute power equivalent to nearly 600,000 H100s. The clusters support current and future AI models, including Llama 3, and are built on Grand Teton, OpenRack, and PyTorch.

Main Points

Meta announces two 24k GPU clusters for AI workloads, including Llama 3 training

Marking a major investment in Meta’s AI future, we are announcing two 24k GPU clusters. We are sharing details on the hardware, network, storage, design, performance, and software that help us extract high throughput and reliability for various AI workloads. We use this cluster design for Llama 3 training.

Commitment to open compute and open source with Grand Teton, OpenRack, and PyTorch

We are strongly committed to open compute and open source. We built these clusters on top of Grand Teton, OpenRack, and PyTorch and continue to push open innovation across the industry.

Meta's roadmap includes expanding AI infrastructure to 350,000 NVIDIA H100 GPUs by the end of 2024

This announcement is one step in our ambitious infrastructure roadmap. By the end of 2024, we aim to continue growing our infrastructure build-out to include 350,000 NVIDIA H100 GPUs as part of a portfolio that will feature compute power equivalent to nearly 600,000 H100s.

Insights

Meta's major investment in AI infrastructure is two 24k GPU clusters built for a range of AI workloads, including Llama 3 training.

Marking a major investment in Meta’s AI future, we are announcing two 24k GPU clusters. We use this cluster design for Llama 3 training.

Meta commits to open compute and open source, building these clusters with Grand Teton, OpenRack, and PyTorch.

We built these clusters on top of Grand Teton, OpenRack, and PyTorch and continue to push open innovation across the industry.

Meta plans to grow its AI infrastructure to include 350,000 NVIDIA H100 GPUs by the end of 2024.

By the end of 2024, we aim to continue growing our infrastructure build-out to include 350,000 NVIDIA H100 GPUs as part of a portfolio that will feature compute power equivalent to nearly 600,000 H100s.