-

AI, Artificial Intelligence, Big Tech, censorship, CIA, corporate media, disinformation, FBI, First Amendment, free speech, hoaxes, intelligence agencies, Internet Iron Curtain, media bias, Media Criticism, Mike Benz, misinformation, Missouri v. Biden, Murthy v. Missouri, National Science Foundation, NewsGuard, NSA, spy agencies, Tucker Carlson, AI and Machine Learning

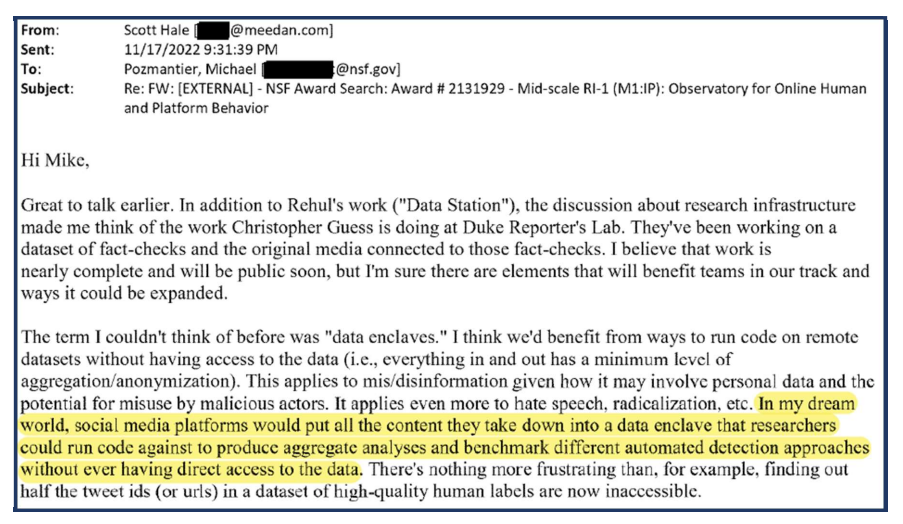

The article discusses how AI censorship tools, funded in part by the federal government, are being used to control the visibility of information online, particularly information that opposes government and corporate media narratives. It highlights the concerns raised about freedom of speech, government transparency, and the potential impact on public opinion. The article also touches on the involvement of federal agencies in these censorship efforts, raising questions about the constitutional implications of such actions.

Main Points

- Control of Information: AI censorship tools are deployed to control the visibility of information that challenges government and corporate media narratives ahead of elections.

- Federal Funding and AI Censorship: The federal government has invested in developing AI tools for internet censorship, raising concerns about freedom of speech.

- Targets of AI Censorship: AI censorship efforts include targeting misinformation and managing public opinion by restricting or promoting certain viewpoints.

- Government Involvement in Censorship: Federal agencies have been involved in censorship efforts, raising significant concerns about constitutional rights and government transparency.

122004763 -

Alan Kay explores a range of topics in a talk at UCLA, moving from the impact of graphical user interfaces to the implications of AI and the relationship between technology practitioners and theorists. He stresses the transformative power of GUIs, the unpredictability of AI, and calls for a more integrated approach to technology development.

Main Points

- GUIs' critical role in tech industry: Alan Kay discusses the importance of GUIs for the success of the computing industry, reflecting on his experiences at Xerox PARC.

- AI's potential misinformation risks: Kay warns about the potential dangers of unrestrained AI development and misuse, using an example of a chatbot going awry.

- Tech theory-practice split: Kay points to a disconnect between technological practitioners and theorists, suggesting a stronger role for government and education in bridging this gap.

122004763 -

GitHub - trevorpogue/algebraic-nnhw: AI acceleration using matrix multiplication with half the multiplications (github.com)

AI Acceleration, machine learning, Algorithm, architecture, Source Code, AI and Machine Learning, Artificial Intelligence

This GitHub repository presents transformative advancements in machine learning accelerator architectures through a novel algorithm, the Free-pipeline Fast Inner Product (FFIP), which demands nearly half the number of multiplier units for equivalent performance, trading multiplications for low-bitwidth additions. It includes complete source code for implementing the FFIP algorithm and architecture, aimed at enhancing the computational efficiency of ML accelerators.

Main Points

- FFIP Algorithm and Architecture: The repository delivers a novel algorithm (FFIP) alongside a hardware architecture that enhances the compute efficiency of ML accelerators by reducing the number of necessary multiplications.

- Applicability and Performance of FFIP: The FFIP algorithm is applicable across various machine learning model layers and has been shown to outperform existing solutions in throughput and compute efficiency.

- Comprehensive Source Code for Implementation: The source code provides a comprehensive setup for implementation including a compiler, RTL descriptions, simulation scripts, and testbenches.
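
The idea of trading multiplications for additions can be illustrated in software with the classical Winograd (1968) fast inner product. This is a minimal sketch of that older technique, not the repository's FFIP algorithm or its hardware architecture; the function names are hypothetical and chosen only for illustration. For even-length vectors, each pair of terms costs one multiplication plus a few additions, and the correction terms depend on only one operand, so in a matrix product they can be computed once per row and once per column.

```python
def pair_product_sum(vec):
    """Return v[0]*v[1] + v[2]*v[3] + ... for an even-length vector."""
    return sum(vec[j] * vec[j + 1] for j in range(0, len(vec), 2))


def fast_inner_product(x, y, x_corr, y_corr):
    """Winograd-style inner product of two even-length vectors.

    The loop performs len(x) / 2 multiplications. x_corr and y_corr are the
    precomputed pair-product sums of x and y, which are subtracted at the end.
    """
    acc = 0
    for j in range(0, len(x), 2):
        # (x_j + y_{j+1}) * (x_{j+1} + y_j) expands to
        # x_j*y_j + x_{j+1}*y_{j+1} + x_j*x_{j+1} + y_j*y_{j+1}
        acc += (x[j] + y[j + 1]) * (x[j + 1] + y[j])
    return acc - x_corr - y_corr


def matmul_fast(a, b):
    """Multiply two matrices (lists of rows) whose shared dimension is even."""
    if len(b) % 2 != 0:
        raise ValueError("shared dimension must be even for this sketch")
    cols = [list(col) for col in zip(*b)]
    # Correction terms are computed once per row of a and once per column of b.
    row_corr = [pair_product_sum(row) for row in a]
    col_corr = [pair_product_sum(col) for col in cols]
    return [
        [fast_inner_product(row, col, rc, cc) for col, cc in zip(cols, col_corr)]
        for row, rc in zip(a, row_corr)
    ]


def matmul_naive(a, b):
    """Reference matrix product using one multiplication per term."""
    cols = [list(col) for col in zip(*b)]
    return [[sum(p * q for p, q in zip(row, col)) for col in cols] for row in a]
```

For an M×K by K×N product, the naive method uses M·K·N multiplications, while this sketch uses M·K·N/2 in the inner loops plus (M+N)·K/2 for the correction terms, which approaches half as the matrices grow.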

122004763 -

AI, Meta, GPU Clusters, Open Source, Infrastructure, AI and Machine Learning, Technology, Artificial Intelligence

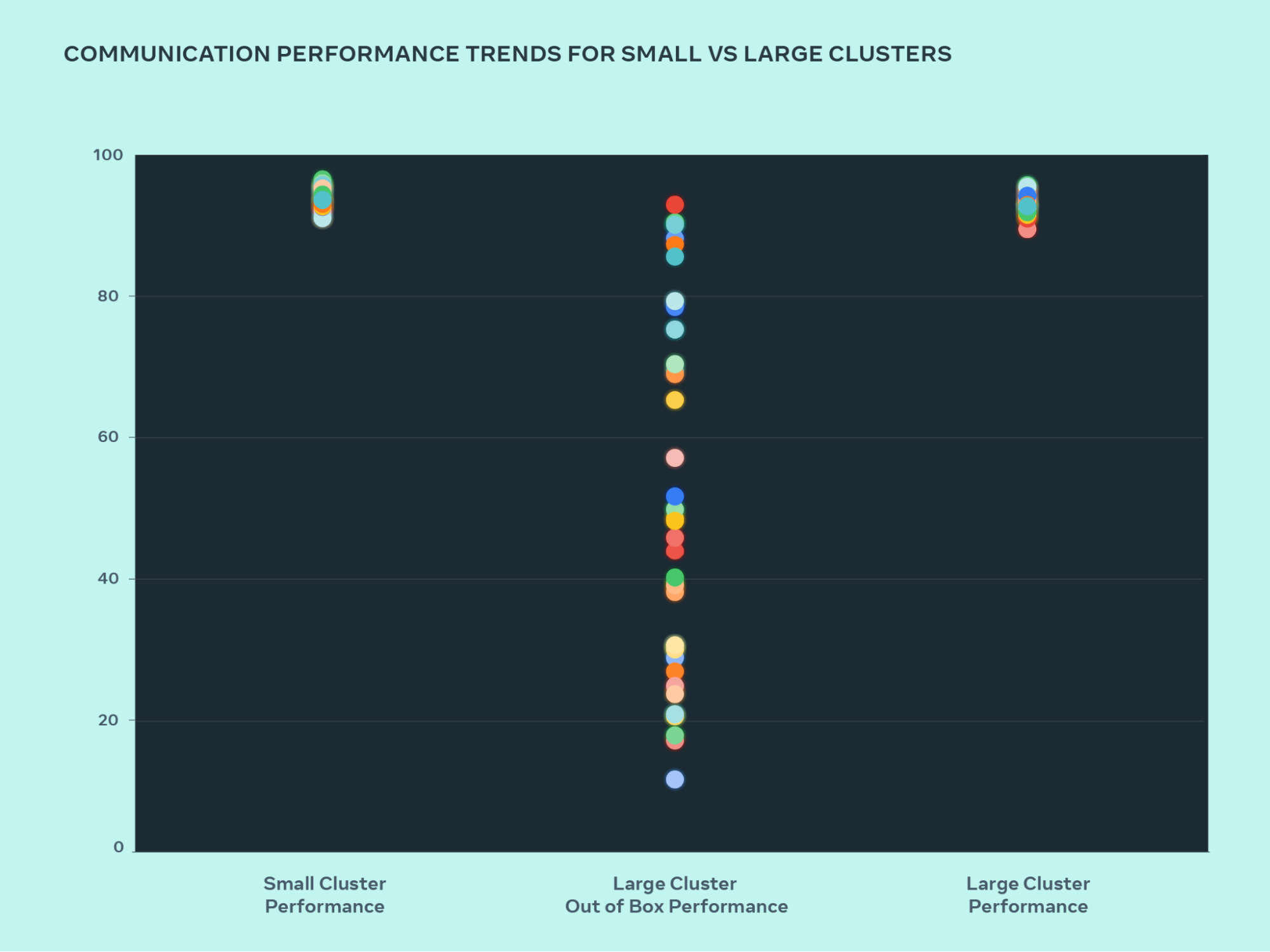

Meta announces the construction of two 24k GPU clusters as a significant investment in its AI future, emphasizing its commitment to open compute, open source, and a forward-looking infrastructure roadmap aimed at expanding its compute power significantly by 2024. The clusters support current and future AI models, including Llama 3, and are built using technologies such as Grand Teton, OpenRack, and PyTorch.

Main Points

- Meta announces two 24k GPU clusters for AI workloads, including Llama 3 training: Marking a major investment in Meta's AI future, we are announcing two 24k GPU clusters. We are sharing details on the hardware, network, storage, design, performance, and software that help us extract high throughput and reliability for various AI workloads. We use this cluster design for Llama 3 training.

- Commitment to open compute and open source with Grand Teton, OpenRack, and PyTorch: We are strongly committed to open compute and open source. We built these clusters on top of Grand Teton, OpenRack, and PyTorch and continue to push open innovation across the industry.

- Meta's roadmap includes expanding AI infrastructure to 350,000 NVIDIA H100 GPUs by 2024: This announcement is one step in our ambitious infrastructure roadmap. By the end of 2024, we’re aiming to continue to grow our infrastructure build-out that will include 350,000 NVIDIA H100 GPUs as part of a portfolio that will feature compute power equivalent to nearly 600,000 H100s.

122004763 -

Thermodynamic Computing: Better than Quantum? | Guillaume Verdon and Trevor McCourt, Extropic - YouTube (youtube.com)

Thermodynamic Computing, Probabilistic Machine Learning, Quantum Computing, AI and Machine Learning, Extropic, Technology, philosophy, Artificial Intelligence, Personal Growth, social change

Extropic, a new venture mentioned in the First Principles podcast, is developing a pioneering computing paradigm that leverages noise and thermal fluctuations for computation. Unlike traditional quantum computing, which requires extreme cooling and faces scalability issues due to quantum decoherence, Extropic’s model aims to harness these thermal effects to improve computing efficiency and scalability. This approach could prove especially beneficial for AI and machine learning applications, offering a new direction for computational technology.

Main Points

- Introduction of a new computing paradigm by Extropic: Extropic is exploring a new paradigm of computing that harnesses environmental noise, specifically thermal fluctuations, to perform computations. This approach aims to surpass the limitations of current quantum and deterministic computing by leveraging the inherent probabilistic nature of thermodynamic processes.

- Challenges in current computing technologies and Extropic's alternative approach: Current computing technologies, including quantum computing, face scalability challenges due to requirements for extreme cooling and complex error correction processes. Extropic's approach aims to embrace noise and thermal fluctuations, offering a more scalable and efficient computing model.

- Prospects of thermodynamic computing in AI and machine learning: Extropic's computing model is anticipated to provide substantial benefits for AI and machine learning, especially in applications that require probabilistic reasoning and low data regimes. This positions thermodynamic computing as a potential game-changer for future technological advancements in AI.
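
As a rough software analogy for computing with noise, the sketch below simulates overdamped Langevin dynamics, a textbook method in which random kicks plus a drift down an energy gradient produce samples from a probability distribution. It is an assumption-laden illustration only: it says nothing about how Extropic's hardware works, and the Gaussian target, step size, and function names are hypothetical choices made for the example.

```python
import math
import random


def langevin_samples(grad_energy, x0, step, n_steps, rng):
    """Simulate overdamped Langevin dynamics in one dimension.

    Each update drifts down the energy gradient and adds Gaussian noise.
    For a small step size the samples approximately follow exp(-energy(x)).
    """
    x = x0
    samples = []
    noise_scale = math.sqrt(2.0 * step)
    for _ in range(n_steps):
        x = x - step * grad_energy(x) + noise_scale * rng.gauss(0.0, 1.0)
        samples.append(x)
    return samples


# Hypothetical target: a Gaussian with mean MU and standard deviation SIGMA,
# whose energy is (x - MU)^2 / (2 * SIGMA^2).
MU, SIGMA = 2.0, 1.0


def grad_gaussian_energy(x):
    """Gradient of the Gaussian energy above."""
    return (x - MU) / SIGMA**2


def estimate_mean_and_variance(seed=0, n_steps=200_000, burn_in=20_000):
    """Run the sampler and summarize the samples after a burn-in period."""
    rng = random.Random(seed)
    samples = langevin_samples(grad_gaussian_energy, 0.0, 0.01, n_steps, rng)
    kept = samples[burn_in:]
    mean = sum(kept) / len(kept)
    variance = sum((s - mean) ** 2 for s in kept) / len(kept)
    return mean, variance
```

Here the noise is not an error to be corrected; it is what lets the process explore the distribution. The estimated mean and variance should land near the target's values, with some statistical scatter.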

122004763 -

machine learning, reinforcement learning, simulation, neural networks, AI and Machine Learning, Artificial Intelligence, Technology

This article discusses the concept of world models - generative neural network models that allow agents to simulate and learn within their own dream environments. Agents can be trained to perform tasks within these simulations and then apply the learned policies in real-world scenarios. The study explores this approach within the context of reinforcement learning environments, highlighting its potential for efficient learning and policy transfer. The integration of iterative training procedures and evolution strategies further supports the scalability and applicability of this method to complex tasks.

Main Points

- World Models as Training Environments: World models enable agents to train in simulated environments or 'dreams' which are generated from learned representations of real-world data.

- Applicability of Dream-learned Policies: By training within these dream environments, agents can develop policies that are applicable to real-world tasks without direct exposure, showcasing a novel form of learning efficiency.

- Evolution Strategies for Policy Optimization: Incorporation of Evolution Strategies alongside world models presents a scalable method for optimizing agent behaviors within complex, simulated environments.
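
The policy-optimization step can be sketched with a deliberately simple evolution strategy: sample candidate parameters around a mean, score them, and move the mean to the average of the best candidates. This is a simplified stand-in and should not be read as the exact strategy used in the article; the toy fitness function below is a hypothetical placeholder for the reward an agent would earn from rollouts inside a learned world model.

```python
import random


def evolve(fitness, dim, generations=200, pop_size=32, elite=8, sigma=0.1, seed=0):
    """Maximize `fitness` with a basic elite-averaging evolution strategy."""
    rng = random.Random(seed)
    mean = [0.0] * dim  # initial parameter vector
    for _ in range(generations):
        # Sample a population of candidates around the current mean.
        population = [
            [m + sigma * rng.gauss(0.0, 1.0) for m in mean]
            for _ in range(pop_size)
        ]
        # Rank candidates from best to worst and keep the elite.
        population.sort(key=fitness, reverse=True)
        best = population[:elite]
        # The new mean is the average of the elite candidates.
        mean = [sum(p[i] for p in best) / elite for i in range(dim)]
    return mean


# Hypothetical stand-in for "reward from simulated rollouts": fitness is
# highest when the parameters match TARGET.
TARGET = [1.0, -1.0, 0.5]


def toy_fitness(params):
    """Negative squared distance to TARGET (higher is better)."""
    return -sum((p - t) ** 2 for p, t in zip(params, TARGET))
```

Because the method only needs a score per candidate and no gradients, each candidate can be evaluated independently, which is what makes this family of methods easy to parallelize.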

122004763 -

Zed, coding environment, performance, collaboration, AI, GPUI, Rust, CRDT, Artificial Intelligence, AI and Machine Learning

Zed is a high-performance coding environment that leverages CPU and GPU power, integrates AI for code generation, and emphasizes collaboration with features like real-time code editing and shared workspaces. It introduces the GPUI framework for smooth rendering and uses Rust for multi-core processing efficiency. Zed also maintains a full syntax tree for enhanced coding assistance and supports conflict-free collaborative coding through CRDTs.

Main Points

- Engineered for Performance: Zed efficiently leverages every CPU core and your GPU to start instantly, load files in a blink, and respond to keystrokes on the next display refresh.

- Intelligence on Tap: Save time and keystrokes by generating code with AI. Zed supports GitHub Copilot out of the box, and you can use GPT-4 to generate or refactor code by pressing ctrl-enter and typing a natural language prompt.

- Language-aware: Zed maintains a full syntax tree for every buffer as you type, enabling precise code highlighting, auto-indent, a searchable outline view, and structural selection.

- Connect with your Team: With Zed, multiple developers can navigate and edit within a shared workspace.
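
To give a feel for what "conflict-free" means in a CRDT, the sketch below implements a grow-only counter, one of the simplest state-based CRDTs. It is not Zed's implementation, and a text-editing CRDT is considerably more involved; the class and method names here are hypothetical. The point it illustrates is that replicas can apply changes independently and still converge once they merge, regardless of merge order.

```python
class GCounter:
    """Grow-only counter: each replica increments only its own slot."""

    def __init__(self, replica_id):
        self.replica_id = replica_id
        self.counts = {}  # replica id -> count contributed by that replica

    def increment(self, amount=1):
        """Record a local increment; negative amounts are not allowed."""
        if amount < 0:
            raise ValueError("a grow-only counter cannot decrease")
        self.counts[self.replica_id] = self.counts.get(self.replica_id, 0) + amount

    def merge(self, other):
        """Fold in another replica's state by taking per-replica maxima.

        Taking the maximum is commutative, associative, and idempotent,
        so replicas converge no matter how often or in what order they merge.
        """
        for replica_id, count in other.counts.items():
            self.counts[replica_id] = max(self.counts.get(replica_id, 0), count)

    def value(self):
        """Total count across all replicas seen so far."""
        return sum(self.counts.values())
```

A short usage example: two replicas count edits independently, then exchange state.

```python
alice, bob = GCounter("alice"), GCounter("bob")
alice.increment(2)
bob.increment(3)
alice.merge(bob)
bob.merge(alice)
print(alice.value(), bob.value())  # both replicas report the same total
```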

122004763


Tags

- Technology

- AI

- Open Source

- programming

- AI and Machine Learning

- Elixir

- Artificial Intelligence

- philosophy

- Personal Growth

- Rust

- business

- social change

- software development

- Python

- machine learning

- innovation

- Linux

- DIY

- collaboration

- Nominatim

- architecture

- Entertainment

- performance improvement

- performance

- education

- Android

- PostgreSQL

- Docker

- Deadspin

- ActivityPub

- Slint

- Developer Experience

- NixOS

- SQL

- Drone

- neural networks

- simulation

- Erlang

- Journalism

- Entrepreneurship

- LLM

- scalability

- Concurrency

- fault tolerance

- immutable URLs

- Nix

- productivity

- WebAssembly

- Database

- Generative AI

- Security

- Home Lab

- deployment

- ESP32

- Language Models

- automation

- Data Visualization

- Markets

- Networking

- Ecto

- computing

- BEAM

- metal recovery

- Quantum Computing

- Robotics

- Web 2.0

- financial wellbeing

- harsh environments

- Miniaturization

- eclipse safety

- Public Health

- Web PKI

- Chess

- Transparency

- memory allocation

- safety

- brinespace

- Diversity

- brewer's yeast

- hardware review

- Cryptanalysis

- compiler backend

- anterior neostriatum

- compression

- Psychology

- Vulnerabilities

- computational complexity

- luxury

- Windows

- Natural Language Processing

- cloud native

- Legal Battle

- maintainability

- Lifestyle

- US Jobs Market

- Mike Benz

- dinosaurs

- Investment Strategy

- Xerox Park

- design

- Bletchley Park

- gaming

- Consumer Advice

- AI Democratization

- Economy

- Behavioral Biology

- Particles

- Parallelism

- brain abnormalities

- Query Optimization

- Church Growth

- Mathematics

- WWII

- problem-solving

- Cerebras Systems

- structured inference

- API

- LaVague

- mechanical design

- UNIX

- Certificate Transparency

- Ericsson

- Iman Gadzhi

- Thermodynamic Computing

- Nvidia

- electrolyte solutions

- setup simplicity

- Synchronization Primitives

- Wasmtime

- work-life balance

- Big Tech

- LLMs

- Twitch

- Tucker Carlson

- Garbage Collection

- machining

- Personal Reflection

- free speech

- Generosity

- Sports Stadiums

- Ash 3.0

- WebTransport

- celestial event

- ChatGPT

- processor-controlled

- computer graphics

- Actor model

- oral rehydration solution

- Missouri v. Biden

- password security

- Academic Freedom

- developer tools

- capitalism

- compilation

- total solar eclipse

- Performance Engineering

- Effective Methods

- 3D printing

- Wi-Fi Control

- hardware-controlled

- S&P 500

- Telemetry

- Encoding

- Theology

- First Amendment

- ISO GQL

- Web Monitoring

- Data Compression

- development environment management

- intelligence agencies

- Satire

- Divine Healing

- NSA

- WSE-3

- corporate media

- YouTube

- Project Management

- Sunlight

- basal ganglia

- constraints

- Online Learning

- Mobile App Development

- Medical Technology

- teamwork

- Server Management

- High-Performance Computing

- aviation

- Hugo Barra

- processes

- GUI Toolkit

- Foreign Data Wrapper

- Debugging

- spiritual experience

- indie game development

- kernel exploitation

- note-taking

- Cost-saving

- biosorption

- shuffle

- Probabilistic Machine Learning

- Economic Trends

- Media Criticism

- CMOS integrated circuits

- GPU security

- Technology Criticism

- health benefits

- Upptime

- Flutter

- Jensen Huang

- copyright

- Logging

- Memory Tagging Extension

- streaming service

- undecidability

- Enigma

- Speculative Execution

- remote work

- Coding

- Social Responsibility

- Corporate Profits

- Adm. Hyman G. Rickover

- Cipher

- Stable Video 3D

- AI Chip

- Nostr

- boot sequence

- APIs

- Pixel 8

- management

- register allocation

- Jordan Peterson

- Non-Transformer Models

- NewsGuard

- Computer Systems Research

- Content Creation

- system administration

- navy

- Software Support

- nuclear submarine

- Veeps

- Elisp

- StashPad Docs

- Truth

- Pentecostal Movement

- Categorical Thinking

- Deglobalization

- live sports

- Annual Letter

- cholera

- GitHub Actions

- Vaccine Mandates

- Flox

- devops

- Containerization

- E-graphs

- NP class

- Data Contamination

- Function Calling

- table formatting

- treatment

- Internet Iron Curtain

- Extropic

- AR

- Ovid

- development

- Federated System

- National Science Foundation

- Operating Systems

- functional programming

- Cypher

- JIT Compiler

- community discourse

- virtual environment

- Nostalgia

- RISC

- time management

- WebRTC

- Microsoft Research

- CIA

- AMD Graphics Cards

- Murthy v. Missouri

- superoptimization

- Geocoding

- GUI

- package manager

- Chips

- Evangelism

- low-cost project

- Wikipedia

- mammals

- communication

- Standards

- library

- OpenAI

- Selenium

- weight loss

- workplace culture

- Cranelift

- diagnosis

- pattern-matching

- troubleshooting

- Handheld Devices

- Circuit Digest

- Statistical Analysis

- AppSignal

- Parallel Computing

- titanosaurs

- spy agencies

- Meta

- The New York Times

- Torsion Springs

- Deep Packet Inspection

- leadership

- Growth

- OpenStreetMap

- Zed

- Ollama

- Let's Encrypt

- Richard Stallman

- GPU Clusters

- life advice

- Garnet

- software failure

- COVID-19

- LiveView

- Turing Machines

- federated models

- operating system

- GPUI

- Long-Polling

- text renderer

- software

- flash memory

- message passing

- AI-driven development

- Genetics

- community building

- schema migration

- cache-store

- Live-Preview

- evaluation

- BSD

- real-time communication

- software engineering

- video games

- hoaxes

- Church-Turing Thesis

- Algorithm

- Human Behavior

- workflows

- conditional

- Log-Normal Distribution

- CRDT

- AI Supercomputers

- Tablex

- FBI

- Garage Door Repair

- TypeScript

- Technology Trends

- Software Release

- pcase

- e-waste management

- N-channel diamond MOSFET

- Hollywood

- Lockdowns

- reptiles

- coding environment

- asynchronous communication

- Emacs

- Uppttime

- biometrics

- Financial Woes

- Blackwell

- 3D generation

- Learning Curve

- computational models

- Structured Logging

- Berkeley

- Server-Sent Events

- Race Conditions

- The Jet Business

- misinformation

- Stashpad

- Customization

- bitfield

- software critique

- Stability AI

- reinforcement learning

- VIBES

- immersive technology

- UI development

- Fingerprinting

- In-Context Learning

- scaling

- SIMA

- Regression Analysis

- Techno-Optimism

- Algorithm Design

- AT&T

- AI Acceleration

- Domain Modeling

- RP2040

- incident commander

- Personal Pronouns

- Ultrasound

- OpenVPN

- diamond electronics

- Hardware Selection

- theoretical computer science

- Notifications

- dehydration

- execution plan

- CVE-2023-6241

- animal behavior

- Google Docs alternative

- macOS

- Patience

- developmental language disorder

- Culture

- Johnny Depp

- hash table

- Sports

- browser automation

- Vision Pro

- nuclear navy

- authentication

- Database Innovation

- knowledge sharing

- Technology History

- virtual reality

- Xerox PARC

- UI/UX Design

- Transition

- WebSockets

- VS Code

- Computer Science

- cryptography

- .NET

- Daytona

- Android security

- Optimization

- PRQL

- Servers

- media bias

- Neovim

- VPN

- code-generation

- Design Systems

- censorship

- user code execution

- Capabilities

- Queries

- game design

- randomness

- x86

- Wafer Scale Engine 3

- lawsuit

- Source Code

- AI agent

- private jet

- PCB design

- Redis

- graph theory

- system design

- Abundance

- Cybersecurity

- sustainable technology

- Tools Format

- Phoenix

- system efficiency

- MEMS

- Decision Table

- Alan Kay

- Public Image

- Management Disputes

- computer vision

- Graph Database

- cond*

- Infrastructure

- Encyclopedia

- VR

- ARPA

- web development

- Apple

- Hermes-2-Pro-Mistral-7B

- DuckDB

- Unison

- disinformation

- sauropod

- Computability

- Feature Learning