
AI, Meta, GPU Clusters, Open Source, Infrastructure, AI and Machine Learning, Technology, Artificial Intelligence

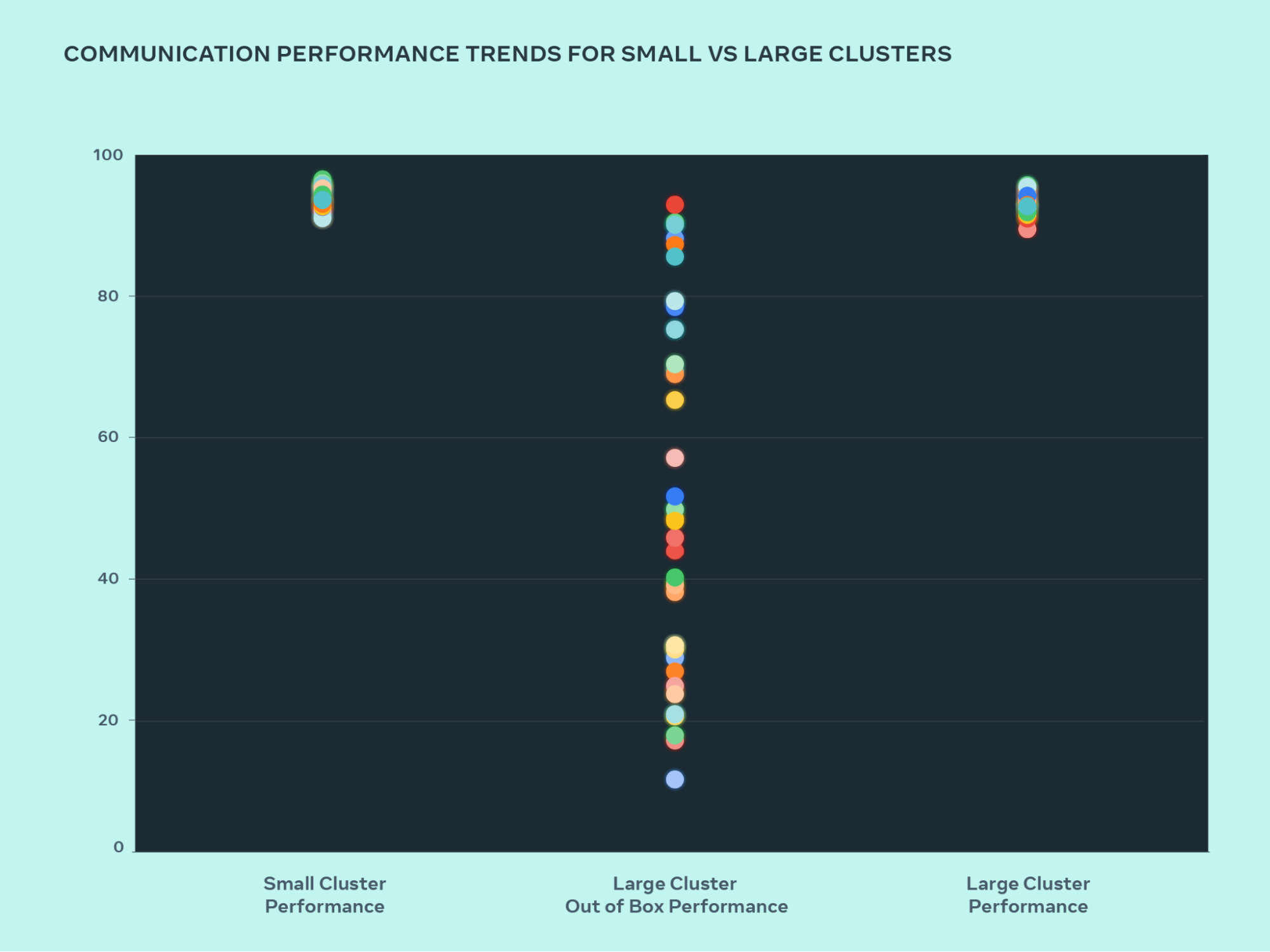

Meta has announced two 24k-GPU clusters as a major investment in its AI future. The announcement stresses the company's commitment to open compute and open source, and it sits within an infrastructure roadmap that targets 350,000 NVIDIA H100 GPUs by the end of 2024. The clusters support current and future AI models, including Llama 3, and are built on Grand Teton, OpenRack, and PyTorch.

Main Points

- Meta announces two 24k GPU clusters for AI workloads, including Llama 3 training

  Marking a major investment in Meta's AI future, we are announcing two 24k GPU clusters. We are sharing details on the hardware, network, storage, design, performance, and software that help us extract high throughput and reliability for various AI workloads. We use this cluster design for Llama 3 training.

- Commitment to open compute and open source with Grand Teton, OpenRack, and PyTorch

  We are strongly committed to open compute and open source. We built these clusters on top of Grand Teton, OpenRack, and PyTorch and continue to push open innovation across the industry.

- Meta's roadmap includes expanding AI infrastructure to 350,000 NVIDIA H100 GPUs by 2024

  This announcement is one step in our ambitious infrastructure roadmap. By the end of 2024, we’re aiming to continue to grow our infrastructure build-out that will include 350,000 NVIDIA H100 GPUs as part of a portfolio that will feature compute power equivalent to nearly 600,000 H100s.
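To put the quoted figures side by side, here is a minimal arithmetic sketch. It rests on assumptions not stated in the summary: "24k" is read as a round 24,000 GPUs per cluster, "nearly 600,000" is treated as an upper bound of 600,000, and the two clusters are assumed to count toward the 350,000 H100 target. The constant and function names are illustrative only.

```python
# Figures quoted in the summary; rounding choices are assumptions.
CLUSTERS = 2
GPUS_PER_CLUSTER = 24_000    # "24k" read as a round 24,000 (assumption)
H100_TARGET = 350_000        # H100 GPUs planned by the end of 2024
TOTAL_H100_EQUIV = 600_000   # "nearly 600,000", used here as an upper bound


def roadmap_summary():
    """Relate the two announced clusters to the end-of-2024 roadmap figures."""
    announced = CLUSTERS * GPUS_PER_CLUSTER
    return {
        # GPUs across both announced clusters
        "announced_gpus": announced,
        # Fraction of the H100 target, if the clusters count toward it
        "share_of_h100_target": announced / H100_TARGET,
        # H100-equivalent compute expected from hardware other than the H100s
        "non_h100_equiv": TOTAL_H100_EQUIV - H100_TARGET,
    }


summary = roadmap_summary()
print(f"Announced GPUs: {summary['announced_gpus']:,}")
print(f"Share of H100 target: {summary['share_of_h100_target']:.1%}")
print(f"H100-equivalents from other hardware: up to {summary['non_h100_equiv']:,}")
```

Under these assumptions, the two clusters together account for roughly one seventh of the planned H100 count, and up to 250,000 H100-equivalents of the portfolio would come from other hardware.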

